Professor James Wilsdon discusses the use of metrics in assessing research excellence and impact.

James Wilsdon is Professor of Science and Democracy in the Science Policy Research Unit at the University of Sussex, and chair of the Independent Review of the Role of Metrics in Research Assessment.

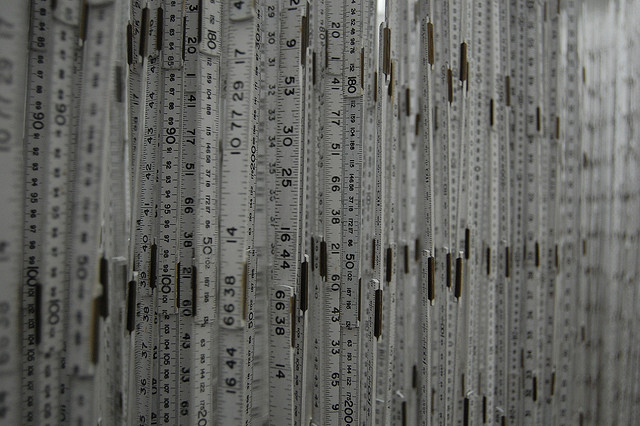

Citations, journal impact factors, H-indices, even tweets and Facebook likes: there is no end of quantitative measures that can now be used to assess the quality and wider impacts of research. But how robust and reliable are such metrics, and what weight, if any, should we give them in the management of the UK’s research system?

These are some of the questions currently being examined by the Independent Review of the Role of Metrics in Research Assessment, which I am chairing, and which includes representatives of the Royal Society, British Academy, Research Councils UK and Wellcome Trust. The review was announced by David Willetts, then Minister for Universities and Science, in April 2014, and is being supported by HEFCE (Higher Education Funding Council for England).

Our work builds on an earlier pilot exercise in 2008-9, which tested the potential for using bibliometric indicators of research quality in the Research Excellence Framework (REF). At that time, it was concluded that citation information was insufficiently robust to be used formulaically or as a primary indicator of quality, but that there might be scope for it to enhance processes of expert review.

The current review is taking a broader look at this terrain, exploring the use of metrics across different academic disciplines and assessing their potential contribution to the development of research excellence and impact within higher education, and in processes of research assessment like the REF. It’s also looking at how universities themselves use metrics, at the rise of league tables and rankings, at the relationship between metrics and issues of equality and diversity, and at the potential for ‘gaming’ and other perverse consequences that can arise from the use of particular indicators in the funding system.

Last summer, we issued a call for evidence and received a total of 153 responses from across the HE and research community. Of these, 57 per cent expressed overall scepticism about the further introduction of metrics into research assessment, a fifth supported their increased use, and a quarter were ambivalent. We’ve also run a series of workshops, undertaken a detailed literature review, and carried out a quantitative correlation exercise to see how the results of REF 2014 might have differed had the exercise relied purely on metrics rather than on expert peer review.

Our final report, entitled ‘The Metric Tide’, will be published on 9 July 2015. But ahead of that, we’ve recently announced our emerging findings on the future of the REF. Some see the greater use of metrics as a way of reducing the costs and administrative burden of the REF. Our view is that it is not currently feasible to assess the quality and impact of research outputs using quantitative indicators alone. Around the edges of the exercise, more use of quantitative data should be encouraged as a contribution to the peer review process. But no set of numbers, however broad, is likely to be able to capture the multifaceted and nuanced judgements on the UK’s research base that the REF currently provides.

So if you’ve been pimping and priming your H-index in anticipation of a metrics-only REF, I’m afraid our review will be a disappointment. Metrics cannot and should not be used as a substitute for informed judgement. But in our final report, we will say a lot more about how quantitative data can be used intelligently and appropriately to support expert assessment in the design and operation of our research system.