Links to external sources may no longer work as intended. The content may not represent the latest thinking in this area or the Society’s current position on the topic.

Attention to sound

Theo Murphy international scientific meeting organised by Dr Alain de Cheveigné, Professor Maria Chait and Dr Malcolm Slaney.

Some sounds are safe to ignore, others require attention. New paradigms and analysis techniques are emerging that enhance our understanding of how the auditory brain makes this choice, and pave the way for novel applications such as the cognitive control of a hearing aid. We gathered neuroscientists, experts in brain signal encoding, and people involved in developing and marketing devices.

The schedule of talks, speaker abstracts and biographies, and recorded audio of the presentations are available below.

Attendance of invited discussants was supported by the H2020 project COCOHA.

Confirmed invited discussants include:

- Dr Aurélie Bidet-Caulet, Lyon Neuroscience Research Centre, France

- Dr Jennifer Bizley, University College London, UK

- Dr Gregory Ciccarelli, Massachusetts Institute of Technology Lincoln Laboratory, USA

- Professor Maarten De Vos, University of Oxford, UK

- Professor Fred Dick, Birkbeck, University of London and University College London, UK

- Professor Tom Francart, KU Leuven, Belgium

- Dr Jens Hjortkjær, Technical University of Denmark, Denmark

- Dr Christophe Micheyl, Starkey France, Lyon Neuroscience Research Center and Ecole Normale Supérieure, France

- Professor Lucas Parra, The City College of New York, USA

- Dr Tobias Reichenbach, Imperial College London, UK

Attending this event

This event has taken place.


Schedule

Chair

Dr Gregory Ciccarelli, Massachusetts Institute of Technology Lincoln Laboratory, USA

Gregory Ciccarelli earned his bachelor’s degree with honors in Electrical Engineering and Computer Science from The Pennsylvania State University in 2009, and his SM and PhD degrees, also in EECS, from the Massachusetts Institute of Technology in 2013 and 2017. He currently conducts advanced signal processing research for biomedical applications, with a focus on auditory attention decoding, at MIT Lincoln Laboratory, where he has worked since 2009. His interests are at the intersection of speech, hearing, computational neuroscience and artificial intelligence.

09:30 - 09:50: Introduction

09:50 - 10:10

Towards intention controlled hearing aids: experiences from eye-controlled hearing aids

A hearing impairment reduces the ability to segregate acoustic sources. This causes difficulties in switching between and following speech streams in complex scenes with multiple talkers. Current hearing aid beamforming technologies rely on a listener’s ability to point their head towards a source of interest. However, this is very difficult in a conversation with spatially separated talkers, where rapid switches between talkers take place. In this talk Professor Lunner will show that eye-gaze position signals can be picked up electrically in the ear canal through electrooculography, and that these signals can be used for fast, intentional eye-gaze control towards the source of interest in a complex listening scene such as a restaurant. Experiments in which eye-gaze signals are combined with motion sensors and beamformers show that substantial benefits, in the form of improved speech intelligibility, are possible for hearing-impaired listeners. Results also indicate that eye control combined with head movements is faster and more precise than head movements alone. The presentation will include several videos illustrating the use cases.

Professor Thomas Lunner, Eriksholm Research Centre, Denmark and Linköping University, Sweden

Professor Lunner was part of the team that developed the world's first digital hearing aid, Digifocus, in the early 1990s. Alongside his research at Eriksholm Research Centre, he is a part-time professor at Linköping University and at the Technical University of Denmark. The aim of this work is to develop hearing aids that can be controlled by will or intention, allowing fast and intuitive switching between objects of interest through hearing aid interfaces such as EarEEG. In collaboration with internationally renowned institutions such as the VUMC in Amsterdam and the Linnaeus HEAD Excellence Centre at Linköping University, Professor Lunner has helped develop outcome methods, such as memory recall and pupillometry, for testing hearing aids under ecological test conditions. Professor Lunner is currently supported by EU Horizon 2020 project grants and by grants from the Swedish and Danish Research Councils and the Oticon Foundation.

10:10 - 10:30: Discussion

10:30 - 11:00: Coffee

11:00 - 11:20

Path to product: cost, benefits and risks

For hearing-impaired listeners, current hearing aid technology largely fails to solve the 'cocktail party problem'. In dynamic conversational turn-taking, the intent of the listener determines the target talker. Using EEG, the measured focus of attention can serve as a proxy for intent. Other potential applications of EEG include the measurement of listening effort and speech comprehension, and automated hearing aid fitting. A primary challenge for the productisation of EEG-based systems is the development of an appropriate electrophysiological front-end. Specific challenges include: (1) sensor arrays that allow a commercially acceptable industrial design; (2) electrodes that do not require conductive paste but overcome the problems of electrical noise and movement artefacts; and (3) platform constraints such as limited power, ultra-low-current implementation and analogue-to-digital (A2D) architectures. Online EEG analysis is computationally expensive and requires middle-tier or cloud-based platforms, which are appropriate only for applications that are not time-sensitive (ie, that do not need latencies below 10 milliseconds). The analysis time window needs to be short, yet current Bluetooth communication protocols introduce latencies of hundreds of milliseconds. Cloud computation adds further delays that depend on the availability and quality of the cellular network. These implementation challenges are not trivial and will require a concerted investment of resources. This approach, however, has the potential to solve the principal and refractory failing of current hearing aid technology, as well as to enable the development of adaptive devices that much better fit individual needs.

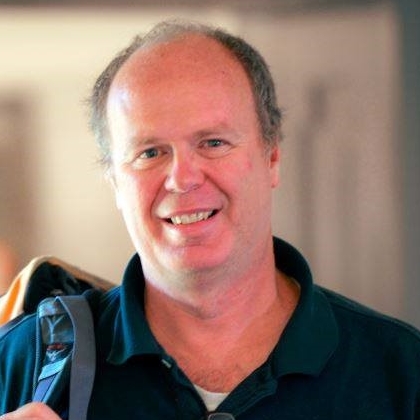

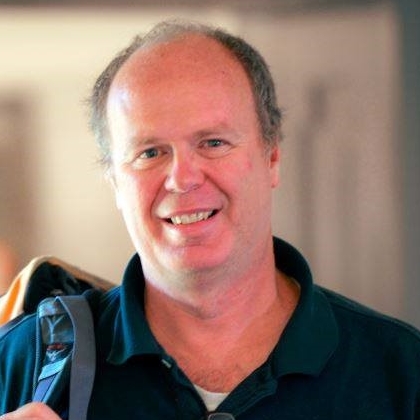

Dr Simon Carlile, Starkey Hearing Technologies, USA

As Vice President for Research at Starkey Hearing Technologies, Dr Carlile developed a user-centred research and technology strategy to reposition the hearing aid as a gateway for health and wellness using biosensing and AI. He created and led an interdisciplinary research team of more than 40 engineers, hearing scientists and audiologists in Berkeley and Minneapolis. As CTO of the startup company VAST Audio, Dr Carlile developed and delivered an innovative, perceptually based technology to restore auditory spatial awareness in hearing-impaired listeners, which has been licensed for use in hearing aids worldwide. Dr Carlile has a BSc (Hons) and PhD in Auditory Neuroscience from the University of Sydney, and did his postdoctoral training at the University of Oxford (UK), where he was a Junior Research Fellow of Green College and a Lecturer in Neuroscience for Pembroke College. He established the Auditory Neuroscience Laboratory at the University of Sydney, where he is also Professor of Neuroscience in the Faculty of Medicine. Dr Carlile has published more than 120 articles in peer-reviewed international journals, edited a foundation volume on Auditory Virtual Reality, and is an Associate Editor for Nature – Scientific Reports.

11:20 - 11:40: Discussion

11:40 - 12:00

The need for auditory attention

Understanding attention is key to many auditory tasks. In this talk Dr Slaney will summarise several aspects of attention that have been used to better understand how humans use attention in their daily lives. This work ranges from top-down and bottom-up models of attention that are useful for solving the cocktail party problem, to the use of eye-gaze and face-pose information to better understand speech in human-machine and human-human-machine interactions. The common thread throughout this work is the use of implicit signals, such as auditory saliency, face pose and eye gaze, as part of a speech-processing system. Dr Slaney will show algorithms and results from speech recognition, speech understanding, addressee detection, and the selection of the desired speech from a complicated auditory environment. All of this is grounded in models of auditory attention and saliency.

Dr Malcolm Slaney, Google AI Machine Hearing, USA

Dr Malcolm Slaney is a research scientist in the AI Machine Hearing Group at Google. He is an Adjunct Professor at Stanford CCRMA, where he has led the Hearing Seminar for more than 20 years, and an Affiliate Faculty member in the Electrical Engineering Department at the University of Washington. He has served as an Associate Editor of IEEE Transactions on Audio, Speech and Signal Processing and IEEE Multimedia Magazine. He has given successful tutorials at ICASSP 1996 and 2009 on 'Applications of Psychoacoustics to Signal Processing', on 'Multimedia Information Retrieval' at SIGIR and ICASSP, and on 'Web-Scale Multimedia Data' at ACM Multimedia 2010. He is a coauthor, with A C Kak, of the IEEE book 'Principles of Computerized Tomographic Imaging'. This book was republished by SIAM in their 'Classics in Applied Mathematics' series. He is coeditor, with Steven Greenberg, of the book 'Computational Models of Auditory Function'. Before joining Google, Dr Slaney worked at Bell Laboratories, Schlumberger Palo Alto Research, Apple Computer, Interval Research, IBM’s Almaden Research Center, Yahoo! Research, and Microsoft Research. For many years, he has led the auditory group at the Telluride Neuromorphic (Cognition) Workshop. Dr Slaney’s recent work is on understanding attention and general audio perception. He is a Senior Member of the ACM and a Fellow of the IEEE.

12:00 - 12:20: Discussion

12:20 - 12:40: General discussion

Chair

Professor Torsten Dau, Technical University of Denmark, Denmark

Torsten Dau was born in Hannover, Germany. He received the diploma degree in Physics from the University of Göttingen, Germany, in 1992, and the Dr. rer. nat. degree in Physics from the University of Oldenburg, Germany, in 1996. From 1997 to 2002, Dr Dau was an assistant professor (C1) in the Physics Department in Oldenburg. From 1999 to 2000, he was a visiting scientist at the Department of Biomedical Engineering (BME) at Boston University and the Research Laboratory of Electronics (RLE) at the Massachusetts Institute of Technology (MIT), USA. In 2003, he received the habilitation degree (Dr. rer. nat. habil.) in Applied Physics from the University of Oldenburg. Since 2003, he has been professor of Acoustics and Audiology, head of the Centre for Applied Hearing Research (CAHR) and the Centre of Excellence for Hearing and Speech Sciences (CHeSS), as well as head of the Hearing Systems Group at the Technical University of Denmark (DTU). His main research interests are in the fields of auditory signal processing and perception, technical and clinical audiology, auditory neuroscience, neural modelling, and technical applications of auditory models in hearing technology. Dr Dau has published numerous journal and conference papers, edited ten books and organised various international conferences. He is a fellow of the Acoustical Society of America and has been an associate editor for several international journals.

14:00 - 14:20

The transformation from auditory to linguistic representations across auditory cortex is rapid and attention-dependent

Professor Simon shows that magnetoencephalography (MEG) responses to continuous speech can be used to directly study lexical as well as acoustic processing. Source-localised MEG responses to passages from narrated stories were modelled as linear responses to multiple simultaneous predictor variables, reflecting both acoustic and linguistic properties of the stimuli. Lexical variables were modelled as an impulse at each phoneme, with values based on the phoneme cohort model, including cohort size, phoneme surprisal and cohort entropy. Results indicate significant left-lateralised effects of phoneme surprisal and cohort entropy. The response to phoneme surprisal, peaking at ~115 ms, arose from auditory cortex, whereas the response reflecting cohort entropy, peaking at ~125 ms, was more ventral, covering the superior temporal sulcus. These short latencies suggest that acoustic information is rapidly used to constrain the word currently being heard. These differences in localisation and timing are consistent with two stages of lexical processing, with phoneme surprisal being a local measure of how informative each phoneme is, and cohort entropy reflecting the state of lexical activation via lexical competition. An additional left-lateralised response to word onsets peaked at ~105 ms. The effect of selective attention was also investigated using a two-speaker mixture, with one speaker attended and one ignored. Responses reflect the acoustic properties of both speakers, but reflect lexical processing only for the attended speech. While previous research has shown that responses to semantic properties of words in unattended speech are suppressed, these results indicate that even the processing of word forms is restricted to attended speech.
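The cohort-model predictors named in this abstract can be made concrete with a small sketch. It assumes one common formulation, not necessarily the exact one used in this work. The cohort after k phonemes is the set of words consistent with those phonemes. Phoneme surprisal is the negative log-probability of the kth phoneme given the preceding ones, estimated from word-frequency mass. Cohort entropy is the Shannon entropy of the frequency-weighted cohort. The lexicon (letters standing in for phonemes), its frequencies and the function names are all hypothetical.

```python
import math

# Hypothetical toy lexicon (assumption): letters stand in for phonemes and
# the values are made-up word frequencies, not data from the study.
LEXICON = {"cat": 50, "cap": 20, "can": 30, "dog": 40}


def cohort(prefix, lexicon):
    """Return the words (with frequencies) consistent with the phonemes heard so far."""
    return {w: f for w, f in lexicon.items() if w.startswith(prefix)}


def cohort_predictors(word, lexicon):
    """For each phoneme of `word`, return (cohort size, surprisal, entropy) in bits.

    Assumes `word` is in `lexicon`, so the cohort never becomes empty.
    """
    results = []
    prev = cohort("", lexicon)  # before any phoneme, every word is a candidate
    for k in range(1, len(word) + 1):
        cur = cohort(word[:k], lexicon)
        prev_total = sum(prev.values())
        cur_total = sum(cur.values())
        # Surprisal: -log2 P(phoneme k | phonemes 1..k-1), with the probability
        # taken as the share of frequency mass that survives into the new cohort.
        surprisal = math.log2(prev_total / cur_total)
        # Cohort entropy: uncertainty about which cohort member is being heard.
        entropy = sum((f / cur_total) * math.log2(cur_total / f) for f in cur.values())
        results.append((len(cur), surprisal, entropy))
        prev = cur
    return results


for size, s, h in cohort_predictors("cat", LEXICON):
    print(f"cohort size={size}  surprisal={s:.3f} bits  entropy={h:.3f} bits")
```

For "cat", the second phoneme carries zero surprisal because it eliminates no candidates. The final phoneme rules out "cap" and "can", carries 1 bit of surprisal and leaves a cohort with zero entropy.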

Professor Jonathan Simon, University of Maryland, USA

Jonathan Simon earned his PhD in physics from the University of California, Santa Barbara, and did postdoctoral research in theoretical general relativity (University of Wisconsin, Milwaukee, and University of Maryland, College Park) before embracing the field of neuroscience. He joined the University of Maryland's Electrical & Computer Engineering Department in 2001, its Biology Department in 2002, and its Institute for Systems Research in 2013. Simon is also co-director of the KIT-Maryland Magnetoencephalography Center and of the Computational Sensorimotor Systems Laboratory. His research focuses on neural processing in the auditory system, especially neural computations that use the temporal character of a sound. He has most recently focused on how the brain represents complex sounds such as speech, and how the brain solves the 'Cocktail Party' problem, ie how, in a crowded and noisy environment, we can home in on a single auditory source (eg one speaker) while simultaneously suppressing the other interfering sounds.

14:20 - 14:40: Discussion

14:40 - 15:00

Bottom-up auditory attention using complex soundscapes

Recent explorations of task-driven (top-down) attention in the auditory modality draw a picture of a dynamic system in which attentional feedback modulates the sensory encoding of sounds in the brain to facilitate the detection of events of interest and, ultimately, perception, especially in complex soundscapes. Complementing these processes are mechanisms of bottom-up attention that are dictated by the acoustic salience of the scene itself but still engage a form of attentional feedback. Studies of auditory salience have often relied on simplified or well-controlled auditory scenes to shed light on the acoustic attributes that drive the salience of sound events. Unfortunately, the use of constrained stimuli, together with a lack of well-established benchmarks of salience judgments, hampers the development of comprehensive theories of bottom-up auditory attention. Here, Professor Elhilali will explore auditory salience in complex and natural scenes. She will discuss insights from behavioural, neural and computational explorations of bottom-up attention and their implications for our current understanding of auditory attention in the brain.

Professor Mounya Elhilali, Johns Hopkins University, USA

Mounya Elhilali received her PhD in Electrical and Computer Engineering from the University of Maryland, College Park in 2004. She is now an associate professor of Electrical and Computer Engineering at Johns Hopkins University and a Charles Renn faculty scholar. She directs the Laboratory for Computational Audio Perception and is affiliated with the Center for Language and Speech Processing. Her research examines sound processing in noisy soundscapes and reverse-engineers the intelligent processing of sounds by brain networks, with applications to speech technologies, audio systems and medical diagnosis. Dr Elhilali is the recipient of the National Science Foundation CAREER award and the Office of Naval Research Young Investigator award.

15:00 - 15:20: Discussion

15:20 - 16:00: Coffee

16:00 - 16:20

Speaker-independent auditory attention decoding without access to clean speech sources

Speech perception in crowded acoustic environments is particularly challenging for hearing-impaired listeners. Assistive hearing devices can suppress background noises that are sufficiently different from speech; however, they cannot suppress interfering speakers without knowing which speaker the listener is attending to. One possible way to determine the listener’s focus is auditory attention decoding, in which the listener's brainwaves are compared with the sound sources in an acoustic scene to determine the attended source, which can then be amplified to facilitate hearing. In this talk, Professor Mesgarani addresses a major obstacle to realising such a system: the lack of access to clean sound sources in realistic situations where only mixed audio is available. He proposes a novel speech separation algorithm that automatically separates speakers in mixed audio without any need for prior training on those speakers. The separated speakers are compared with evoked neural responses in the listener's auditory cortex to determine and amplify the attended speaker. The results show that auditory attention decoding with automatically separated speakers is as accurate and fast as decoding with clean speech sounds. Moreover, Professor Mesgarani demonstrates that the proposed method significantly improves both the subjective and objective quality of the attended speaker. By combining the latest advances in speech processing technologies and brain-computer interfaces, this study addresses a major obstacle to the practical realisation of auditory attention decoding, which can assist individuals with hearing impairment and reduce listening effort for normal-hearing listeners in adverse acoustic environments.
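A common way to implement the comparison step described in this abstract is to correlate an envelope reconstructed from neural data with the envelope of each candidate source, then select the best match. The following is a minimal sketch under that assumption. The decoder that produces the reconstruction is not shown. The function name `decode_attended` and the synthetic data are illustrative and do not describe the method presented in the talk.

```python
import numpy as np


def decode_attended(reconstructed_env, source_envs):
    """Pick the candidate source whose envelope best matches the neural reconstruction.

    reconstructed_env: 1-D array, envelope reconstructed from neural data
        (the decoder that produces it is assumed and not shown here).
    source_envs: 2-D array with one row per candidate source, eg the outputs
        of a speech-separation front end.
    Returns the index of the decoded attended source and the Pearson
    correlation with every candidate.
    """
    r = np.array([np.corrcoef(reconstructed_env, env)[0, 1] for env in source_envs])
    return int(np.argmax(r)), r


# Synthetic example (hypothetical data): two speaker envelopes, and a noisy
# "reconstruction" that tracks speaker 0.
rng = np.random.default_rng(0)
envelopes = np.abs(rng.standard_normal((2, 1000)))
reconstruction = envelopes[0] + 0.5 * rng.standard_normal(1000)

attended, correlations = decode_attended(reconstruction, envelopes)
print(attended, np.round(correlations, 2))
```

In a full system, the stream at index `attended` would then be amplified relative to the others. Shorter decision windows make the decision faster but the correlation estimates noisier.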

Professor Nima Mesgarani, Columbia University, USA

Nima Mesgarani is an associate professor at the Zuckerman Mind Brain Behavior Institute at Columbia University. He received his PhD from the University of Maryland. He was a postdoctoral scholar in the Center for Language and Speech Processing at Johns Hopkins University and in the neurosurgery department of the University of California, San Francisco, before joining Columbia in 2013. He was named a Pew Scholar for Innovative Biomedical Research in 2015 and received the National Science Foundation Early Career Award in 2016.

16:20 - 16:40: Discussion

16:40 - 17:00

On the encoding and decoding of natural auditory stimulus processing using EEG

Over the past few years there has been a surge in efforts to model neurophysiological responses to natural sounds. This has included a variety of methods for decoding brain signals to infer how a person is engaging with and perceiving the auditory world. In this talk Professor Lalor will discuss recent efforts to improve these decoding approaches and broaden their utility. In particular, he will focus on three related factors: 1) how we represent the sound stimulus, 2) which features of the data we focus on, and 3) how we model the relationship between stimulus and response. Professor Lalor will present data from several recent studies in which he has used different stimulus representations, different EEG features and different modelling approaches, in an effort to build more useful decoding models and more interpretable encoding models of brain responses to sound.
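A widely used form of encoding model for factor 3) is a linear temporal response function (TRF). It is estimated by ridge regression of the EEG response on time-lagged copies of a stimulus feature, such as the speech envelope. The sketch below assumes a single stimulus feature, a single EEG channel and a hand-picked regularisation value. The function names are hypothetical, and the code does not reproduce the specific approach used in these studies.

```python
import numpy as np


def lagged_design(stimulus, n_lags):
    """Design matrix whose column j is the stimulus delayed by j samples (zero-padded)."""
    n = len(stimulus)
    X = np.zeros((n, n_lags))
    for j in range(n_lags):
        X[j:, j] = stimulus[: n - j]
    return X


def fit_trf(stimulus, response, n_lags, ridge=1.0):
    """Ridge-regression estimate of a forward (encoding) TRF for one channel."""
    X = lagged_design(stimulus, n_lags)
    # Closed-form ridge solution: w = (X^T X + ridge * I)^-1 X^T y
    return np.linalg.solve(X.T @ X + ridge * np.eye(n_lags), X.T @ response)


# Synthetic check (hypothetical data): simulate a response by convolving a
# random "envelope" with a known TRF, add noise, then recover the TRF.
rng = np.random.default_rng(1)
stim = rng.standard_normal(5000)
true_trf = np.array([0.0, 0.5, 1.0, 0.5, 0.0])
eeg = np.convolve(stim, true_trf)[: len(stim)] + 0.1 * rng.standard_normal(len(stim))

estimated = fit_trf(stim, eeg, n_lags=len(true_trf))
print(np.round(estimated, 2))
```

Swapping the roles, by regressing the stimulus on time-lagged EEG from all channels, gives the backward (decoding) models used in attention decoding. In practice the ridge parameter is usually chosen by cross-validation.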

Professor Ed Lalor, University of Rochester, USA and Trinity College Dublin, Ireland

Ed Lalor received the BE in electronic engineering from University College Dublin in 1998 and the MSc in electrical engineering from the University of Southern California in 1999. After working as a silicon design engineer and as a primary school teacher for children with learning difficulties, Ed joined MIT's Media Lab Europe, where he investigated brain-computer interfacing and attention from 2002 to 2005. This led to a PhD in biomedical engineering from UCD in 2006. Subsequently, he spent two years as a postdoctoral researcher at the Nathan Kline Institute in New York and two years at Trinity College Dublin as a postdoctoral fellow. Following a brief stint at University College London, he returned to TCD as an Assistant Professor in 2011, where he established a group investigating human sensation, perception and cognition. In 2016 he joined the Departments of Biomedical Engineering and Neuroscience at the University of Rochester as an Associate Professor.

17:00 - 17:20: Discussion

17:20 - 17:40: General discussion

Chair

Professor Adrian KC Lee, University of Washington, USA

Adrian KC Lee is an Associate Professor in the Department of Speech & Hearing Sciences and at the Institute for Learning and Brain Sciences at the University of Washington, Seattle, USA. He obtained his bachelor’s degree in electrical engineering at the University of New South Wales and his doctorate at the Harvard-MIT Division of Health Sciences and Technology. Dr Lee’s research focuses on developing multimodal imaging techniques to investigate the cortical network involved in auditory scene analysis and attention, especially through designing novel behavioural paradigms that bridge the gap between psychoacoustics and neuroimaging research.

08:30 - 08:50

Facilitation and inhibition in visual selective attention

Visual selective attention is thought to facilitate performance through both enhancement and inhibition of the sensory processing of goal-relevant and irrelevant (or distracting) information. While much insight has been gained over the past few decades into the neural mechanisms underlying the facilitatory effects of attention, much less is known about inhibitory mechanisms in visual attention. In particular, it is still unclear whether target facilitation and distractor inhibition are simply different sides of the same coin or whether they are controlled by distinct neural mechanisms. Moreover, recent work indicates that suppression of visual distractors only emerges when information about the distractor can be derived directly from experience, consistent with a predictive coding model of expectation suppression. This also raises the question of how visual attention and expectation interact to bias information processing. In this talk, Professor Slagter will discuss recent findings from several behavioural and EEG studies that examined how expectations about upcoming target or distractor locations and/or features influence the facilitatory and inhibitory effects of attention on visual information processing and representation, using ERPs, multivariate decoding analyses and inverted encoding models. Collectively, these findings confirm an important role for alpha oscillatory activity in the top-down biasing of visual attention to, and sharpening of representations of, target locations. Yet they also show that target facilitation and distractor suppression are differentially influenced by expectation and rely at least in part on different neural mechanisms, with distractor suppression occurring selectively after stimulus presentation. This latter finding raises the question of whether voluntary preparatory inhibition is possible at all.

Professor Heleen Slagter, University of Amsterdam, The Netherlands

Heleen Slagter is Associate Professor of Brain and Cognition at the University of Amsterdam, where she also received her PhD in cognitive neuroscience in 2005. After a 4-year postdoc at the University of Wisconsin-Madison, USA, she returned to Amsterdam to set up her own lab in 2009. Her research focuses on core cognitive functions, such as attention, their neural basis, and their plasticity. What are the mechanisms that allow us to perceive, select, suppress, and become aware of information in the environment? How does learning based on prior experience, or mental training as cultivated by meditation, influence these mechanisms that adaptively control information processing? Dr Slagter’s work has garnered awards and funding, including a prestigious VIDI grant from the Netherlands Organisation for Scientific Research, an ERC Starting Grant, and an early career award from the Society for Psychophysiological Research.

08:50 - 09:10: Discussion

09:10 - 09:30

Rhythmic structures in visual attention: behavioural and neural evidence

In a crowded visual scene, attention must be efficiently and flexibly distributed over time and space to accommodate different task contexts. In this talk, Professor Luo will present several studies from her lab investigating the temporal structure of visual attention. First, using a time-resolved behavioural measurement, the group demonstrates that attentional behavioural performance contains temporal fluctuations (theta-band, alpha-band, etc), suggesting that neuronal oscillatory profiles might be directly revealed at the behavioural level. These behavioural oscillations alternate in time between locations, suggesting that attention samples multiple items in a time-based, rhythmic manner. Second, by combining EEG recordings with a temporal response function (TRF) approach, the group extracted object-specific neuronal impulse responses during multi-object selective attention. The results show that attention rhythmically switches among visual objects every ~200 ms, and that the spatiotemporal sampling profile adapts to different task contexts. Finally, by combining MEG recordings with a decoding approach, the group demonstrates that attention fluctuates between attended orientation features in a theta-band rhythm, suggesting that feature-based attention is mediated by rhythmic sampling similar to that for spatial attention. In summary, attention is not stationary but dynamically samples multiple visual objects in a periodic or serial-like way. This work argues for a central role of temporal organisation in attention, which flexibly and efficiently organises resources in the time dimension.
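Behavioural oscillations of the kind described in this abstract are often identified by sampling performance at finely spaced cue-to-target intervals and examining the spectrum of the resulting time course. The sketch below assumes an evenly sampled accuracy time course and uses a Hann taper and an FFT. It omits the permutation statistics that a real analysis would need. The function name and synthetic data are hypothetical.

```python
import numpy as np


def behavioural_spectrum(accuracy, step_s):
    """Amplitude spectrum of an evenly sampled behavioural time course.

    accuracy: performance (eg hit rate) at each cue-to-target interval.
    step_s: spacing between successive intervals, in seconds.
    """
    x = np.asarray(accuracy, dtype=float)
    x = x - x.mean()            # remove the mean so the 0 Hz bin does not dominate
    x = x * np.hanning(len(x))  # taper to reduce spectral leakage
    amp = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(len(x), d=step_s)
    return freqs, amp


# Synthetic example (hypothetical data): 50 intervals at 20 ms spacing
# (1 s in total) with a 5 Hz, theta-band modulation of accuracy.
step = 0.02
t = np.arange(50) * step
accuracy = 0.7 + 0.05 * np.sin(2 * np.pi * 5 * t)

freqs, amp = behavioural_spectrum(accuracy, step)
print(f"peak at {freqs[np.argmax(amp)]:.1f} Hz")
```

The frequency resolution is the reciprocal of the total sampled duration (here 1 Hz). The highest resolvable frequency is half the sampling rate (here 25 Hz). Together, the interval spacing and range therefore constrain which rhythms can be detected.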

Professor Huan Luo, Peking University, China

Dr Huan Luo is currently an associate professor at the School of Psychological and Cognitive Sciences, Peking University, China. She is also a PI of the McGovern Institute for Brain Research at PKU. Before joining PKU in 2015, she worked at the Institute of Biophysics, Chinese Academy of Sciences (2008-2014). She received her PhD from the University of Maryland, College Park, USA, under the supervision of David Poeppel. Her previous work (2007-2013) used MEG recordings to explore the phase-based neural oscillatory mechanism for natural speech processing, audiovisual integration, and auditory memory. Her recent work focuses on the temporal dynamics of visual processing, including multi-object attention, perceptual integration, and working memory, employing various time-resolved approaches (time-resolved behavioural measurement, MEG, EEG, etc).

09:30 - 09:50: Discussion

09:50 - 10:20: Coffee

10:20 - 10:40

Attention across sound and vision: effects of perceptual load

Load Theory of attention and cognitive control offers a hybrid model that combines capacity limits in perception with automaticity of processing. The model proposes that perception has limited capacity but proceeds automatically and involuntarily, in parallel, on all stimuli within capacity: relevant as well as irrelevant. Much evidence has accumulated in support of Load Theory in vision research. However, the cross-modal effects of perceptual load across the senses are less clear. In her talk, Professor Lavie will present recent work on the effects of visual perceptual load on auditory perception and the related neural activity, as assessed with magnetoencephalography. The results showed that the level of unattended auditory perception and the related neural signal critically depend on the level of perceptual processing load in the visual attention task. Conditions of high perceptual load, which take up all capacity with attended-task processing, lead to reduced processing of unattended stimuli. In contrast, in conditions of low perceptual load, which leave spare capacity, ignored task-irrelevant stimuli are nevertheless perceived and elicit a neural response. These findings demonstrate the value of understanding the role of attention in auditory processing within the framework of Load Theory.

Professor Nilli Lavie FBA, University College London, UK

Nilli Lavie is a Professor of Psychology and Brain Sciences at the UCL Institute of Cognitive Neuroscience, where she heads the Attention and Cognitive Control laboratory. She is the originator of the Load Theory of attention, perception and cognitive control, and is renowned for having provided a resolution to the four-decade-long debate in psychology on the locus of capacity limits in human information processing. She is an elected fellow of the British Academy, the Royal Society of Biology, the British Psychological Society, and the Association for Psychological Science, US, and an honorary life member of the Experimental Psychology Society, UK. Her current research concerns attention, perception, multi-sensory integration, emotion, and cognitive control over behaviour. She uses a combination of research methods spanning neuroimaging (fMRI, VBM, EEG, spectroscopy), behavioural experiments, psychophysics, and machine learning.

10:40 - 11:00: Discussion

11:00 - 11:20

Networks controlling attention in vision (and audition)

Neuroimaging with fMRI shows that there are distinct networks biased towards the processing of visual and auditory information. These networks include inter-digitated areas in frontal cortex as well as corresponding primary and secondary sensory regions. In Professor Shinn-Cunningham's studies, these distinct frontal regions appear consistently in individual subjects across multiple studies spanning years; however, the inter-digitated structural organisation of the 'visual' and 'auditory' regions in frontal cortex is not apparent when standard methods are used to co-register and average fMRI results across subjects. Although the networks that include these inter-digitated frontal control regions are 'sensory biased', they are also recruited to process information in the other sensory modality as needed. Specifically, areas that are always engaged by auditory attention are recruited when visual tasks require processing of temporal structure, but not when the same visual inputs are accessed for tasks requiring processing of spatial information. Conversely, processing of auditory spatial information preferentially engages the visually biased brain network – a network that is traditionally associated with spatial visual attention. This visuo-spatial network includes retinotopically organised spatial maps in parietal cortex. Recent EEG results from Professor Shinn-Cunningham's lab confirm that auditory spatial attention makes use of the parietal maps in the 'visual spatial attention' network. Together, these results reveal that visual networks for attention are a shared resource used by the auditory system.

Professor Barbara Shinn-Cunningham, Carnegie Mellon University, USA

Barbara Shinn-Cunningham trained as an electrical engineer (Brown University, ScB; MIT, MS and PhD). Her research uses behavioural, neuroimaging, and computational methods to understand auditory processing. In 2018, she became the Director of the new Carnegie Mellon University Neuroscience Institute and Professor in the Center for the Neural Basis of Cognition and in Psychology, Biomedical Engineering, and Electrical & Computer Engineering at CMU. Before joining CMU, she spent 21 years on the faculty of Boston University. Her work has been recognised by the Alfred P. Sloan Foundation, the Whitaker Foundation, and the Vannevar Bush Fellows program. She is a Fellow of the Acoustical Society of America and the American Institute for Medical and Biological Engineering, and a lifetime National Associate of the National Research Council "in recognition of extraordinary service to the National Academies in its role as advisor to the Nation in matters of science."


11:20 - 11:40 Discussion

11:40 - 12:00 General discussion

Chair

Professor Stephen David, Oregon Health & Science University, USA


Humans and other animals create a coherent sense of the world from a continuously changing sensory environment. To understand this process, Professor Stephen David’s lab conducts experiments that manipulate behavioural state and record the activity of neural populations during the presentation of natural and naturalistic sounds. Data from these studies are used to develop computational models of neural sound coding, with the aim of understanding communication disorders and improving engineered systems for sensory signal processing. Professor Stephen David received his AB from Harvard University in 1998 and his PhD from the University of California, Berkeley in 2005.

13:30 - 13:50

Objective, reliable, and valid? Measuring auditory attention

Auditory attention is a fascinating feat. For example, it is astonishing how our brain 'does away' with considerable differences in sound pressure between a behaviourally relevant sound source and other, interfering sources. Yet auditory attention remains an elusive phenomenon: do we really understand enough about auditory attention yet to build machines that attend, or machines that help us attend? Drawing on behavioural, electrophysiological, and functional imaging data from his own lab and others, Professor Obleser will take stock of the evidence. Are top-down selective-attention abilities indeed a stable, trait-like feature of the individual listener, with a predictable decline in older adults? And what are we really getting from our current go-to neural measures of auditory attention, speech tracking (also known as 'neural entrainment') versus alpha-power fluctuations? Luckily, Professor Obleser will probably run out of time just as the talk reaches the main question: what are we measuring when we measure auditory attention?

Professor Jonas Obleser, University of Lübeck, Germany

Jonas Obleser studies auditory cognition and neuroscience. Since 2016, he has held the Chair of Physiological Psychology at the University of Lübeck, Germany. After training and a PhD in Psychology at the University of Konstanz, he worked at the Institute of Cognitive Neuroscience, University College London, and at the Max Planck Institute in Leipzig, where he set up the research group 'Auditory Cognition'. His current research interests include neural oscillations in sensation, perception and cognition, executive functions such as attention and memory, and how these processes interface neurally in human listeners. His research is currently funded by the European Research Council (ERC).


13:50 - 14:10 Discussion

14:10 - 14:30

Auditory selective attention: lessons from distracting sounds

A fundamental assumption in attention research is that, since processing resources are limited, the core function of attention is to manage these resources and allocate them among concurrent stimuli or tasks, according to current behavioural goals and environmental needs. However, despite decades of research, we still do not have a full characterisation of the nature of these processing limitations, or ‘bottlenecks’, ie which processes can be performed in parallel and where the need for attentional selection kicks in. This question is particularly pertinent in the auditory system, which has been studied far less extensively than the visual system and is proposed to have a wider capacity for parallel processing of incoming stimuli. In this talk Dr Golumbic will discuss a series of experiments studying the depth of processing applied to task-irrelevant sounds and their neural encoding in auditory cortex. She will look at how this is affected by the acoustic properties, temporal structure, and linguistic structure of unattended sounds, as well as by overall acoustic load and task demands, in an attempt to understand which levels suffer most from processing bottlenecks. In addition, she will discuss what we can learn about the capacity for parallel processing of auditory stimuli by pushing the system to its limits and requiring attention to be divided among multiple concurrent inputs.

Dr Elana Golumbic, Bar Ilan University, Israel

Elana Zion Golumbic is a Senior Lecturer at the Multidisciplinary Center for Brain Research at Bar Ilan University, where she heads the Human Brain Dynamics Laboratory. Her main research interest is how the brain processes dynamic information under real-life conditions and environments. Research in the Human Brain Dynamics lab focuses on understanding the neural mechanisms underlying the processing of natural continuous stimuli, such as speech and music, online multisensory integration, and focusing attention on one particular speaker in noisy and cluttered environments. Her lab uses a range of techniques for recording electric and magnetic signals from the human brain (EEG, MEG and ECoG), as well as advanced psychophysical tools (eye-tracking, virtual reality, psychoacoustics). This rich methodological repertoire makes it possible to study the system at multiple levels and to gain a wide perspective on the link between brain and behaviour.


14:30 - 14:50 Discussion

14:50 - 15:30 Coffee

15:30 - 15:50

The neuro-computational architecture of auditory attention

Auditory attention is a crucial component of real-life listening and is required, for instance, to enhance a particularly relevant aspect of a sound or to separate a sound of interest from noisy backgrounds. When listening to simple tones, attending to a certain frequency range induces a rapid and specific adaptation of neuronal tuning, which ultimately enhances processing of that frequency range and suppresses the other frequencies. But what are the neural mechanisms enabling attentive selection and enhancement when listening to complex real-life sounds and scenes? At which levels of neural sound representation does attention operate? And how do these mechanisms depend on the specific behavioural requirements? High-resolution fMRI and computational modelling of sound representations can both help address these questions. Sub-millimetre fMRI makes it possible to distinguish, non-invasively in humans, the activity and connectivity of neuronal populations across cortical layers (laminar fMRI). This is required for disentangling feedforward and feedback processing in primary and non-primary auditory areas, and the communication between auditory and other areas (eg frontal areas). Modelling of sound representations allows well-defined hypotheses to be formulated about the nature of the simple and complex features processed in the network of auditory areas, and about how neural sensitivity to these features is affected by attention and behavioural task demands. The combination of laminar fMRI and sound representation models is thus ideally positioned to unravel the neural circuitry and the computational architecture of auditory attention in naturalistic listening scenarios.

Professor Elia Formisano, Maastricht University, The Netherlands

Elia Formisano is Professor of Analysis Methods in Neuroimaging and Scientific Director of the Maastricht Brain Imaging Center (MBIC). He is principal investigator of the Auditory Cognition research group at the Department of Cognitive Neuroscience, Maastricht University, and of the research line Computational Biology of Neural and Genetic Systems at the Maastricht Centre for Systems Biology (MaCSBio). His research aims to uncover the neural basis of human auditory perception and cognition by combining multimodal functional neuroimaging with advanced signal analysis and computational modelling. He pioneered the use of ultra-high-field functional MRI and machine learning for the investigation of human audition.


15:50 - 16:10 Discussion

16:10 - 16:30

How attention modulates processing of mildly degraded speech to influence perception and memory

Professor Johnsrude and colleagues have previously demonstrated that, whereas the pattern of brain (fMRI) activity elicited by clearly spoken sentences does not seem to depend on attention, patterns differ markedly depending on whether listeners attend to highly intelligible but degraded (6-band noise-vocoded) sentences (Wild et al, J Neurosci, 2012). They have replicated and extended this work to sentences that, although slightly degraded (12-band noise-vocoded), can be reported word-for-word with 100% accuracy. Even for these very intelligible materials, a marked dissociation was observed in patterns of brain activity when people attended to the sentences compared with when they were performing a multiple object tracking task. Furthermore, in both experiments, memory for degraded items was enhanced by attention, whereas memory for clear sentences was not. This suggests that even perfectly intelligible but degraded sentences are processed in a qualitatively different, attentionally gated way compared with clear sentences. Supported by a Canadian Institutes of Health Research operating grant (MOP 133450) and a Natural Sciences and Engineering Research Council of Canada Discovery grant (3274292012).

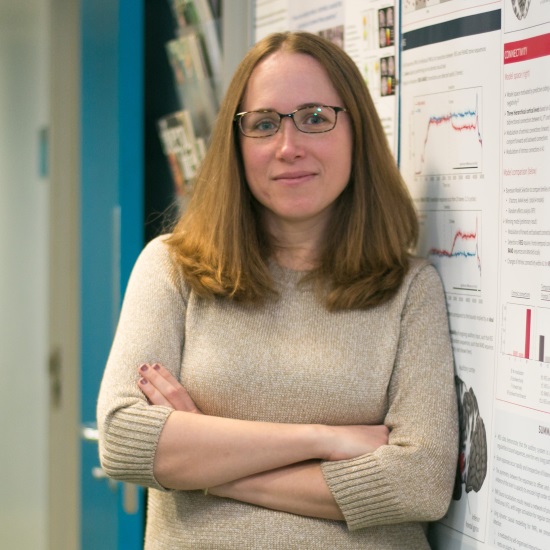

Professor Ingrid Johnsrude, Western University, Canada

Dr Johnsrude (PhD McGill) is Professor and Western Research Chair at Western University. She trained as a clinical neuropsychologist but for the last 20 years has used neuroimaging (particularly EEG and fMRI) and psychoacoustic methods to study the importance of knowledge and attention in guiding auditory and speech perception. Dr Johnsrude is the author of more than 100 peer-reviewed publications, which have been cited nearly 19,700 times (Google Scholar). She has won multiple awards for her research, including the EWR Steacie Fellowship (2009) from the Natural Sciences and Engineering Research Council of Canada. She lives in London, Canada with her husband and two children.


16:30 - 16:50 Discussion

16:50 - 17:10 General discussion

17:10 - 17:30 Concluding session