Public scrutiny is critical for trust in, and democratic legitimacy for, the use of data-driven decision-making and algorithmic systems in our society.

The Royal Society’s new publication, The UK data governance landscape, is a valuable resource published at a moment of immense uncertainty, as well as possibility, in the data governance ecosystem. Midway through 2020 we stand at the intersection of three monumental and ongoing ruptures: the coronavirus pandemic, which is accelerating the application of data-driven technologies (PDF) to health as well as policymaking; the Black Lives Matter movement, which is drawing long-overdue attention to the unequal distribution of the benefits of digital transformation as well as the problem of bias in algorithmic systems (PDF); and the impending departure of the United Kingdom from the European Union, which is generating questions about the future of international data flows and the opportunities the UK faces to expand its leadership in artificial intelligence (AI). These three phenomena are already changing, and will transform, the data governance landscape. There is a pressing need to ensure the changes wrought benefit people and society.

The Ada Lovelace Institute was established by the Nuffield Foundation in 2018 as an independent research and deliberative body with a mission to ensure data and AI work for people and society. We are committed to addressing these challenges and realising the social value of data, our most important public good.

Filling the data gaps

As decision-making becomes increasingly data-driven it is imperative that the right data is driving it: data that is comprehensive, accurate and representative. Yet gaps in critical datasets and missing data – what Professor David Hand calls ‘dark data’ – plague the data ecosystem.

Too often, those data gaps concern the most vulnerable in society: there is no data collected on vulnerable parties or witnesses who appear before the civil courts, for example, leading senior judges to declare the domain a ‘data desert’. There is an absence of public data on cost savings won through the roll-out of Universal Credit and on rental costs throughout the UK. The recent Public Health England report (PDF) into disparities in deaths from COVID-19, which found that people from BAME backgrounds are up to twice as likely to die from the disease as White Britons, was unable to draw conclusions due to a lack of data to inform them. The openSAFELY study (PDF), which analysed 17 million patient records to identify factors associated with COVID-19 deaths, found that 26% of patient records did not contain ethnicity data.

Bad – or incomplete – data will lead to badly informed decisions. It may also make for bad algorithms. Biased and non-representative datasets cause the replication of bias in algorithmic systems, as has been evidenced in research in the United States into algorithmic systems used in healthcare and criminal sentencing, and in facial recognition systems. The economic fallout from the coronavirus crisis is likely to see increased government deployment of algorithmic decision-making systems; ensuring these are free from bias will be critical to prevent data-driven decision-making from entrenching, rather than rectifying, inequalities.

Making sure the right data is in the right hands

Too often, personal data is treated as exclusive, monetised by organisations and siloed in ways that inhibit research and development for public benefit. There is an imbalance in the data ecosystem which sees the private sector enjoy a monopoly on data generated by the public. Researchers have long called for social media firms to share their data, but governments too have found themselves begging at the feet of the large tech platforms for access to data to facilitate the pandemic response.

The pandemic has demonstrated how, in times of crisis, barriers to data sharing can be temporarily lowered and data shared to great benefit. As the crisis subsides and the democratic legitimacy for exceptional measures evaporates, questions emerge about how to constitute the political will, technological capability and legal safeguards necessary to continue to pursue socially beneficial data sharing and access arrangements. The Ada Lovelace Institute’s Rethinking Data programme aims to build the institutions – technical, legal, political – necessary to ensure that data is fairly distributed and equitably enjoyed, and that public institutions are able to realise the value of data generated by the public.

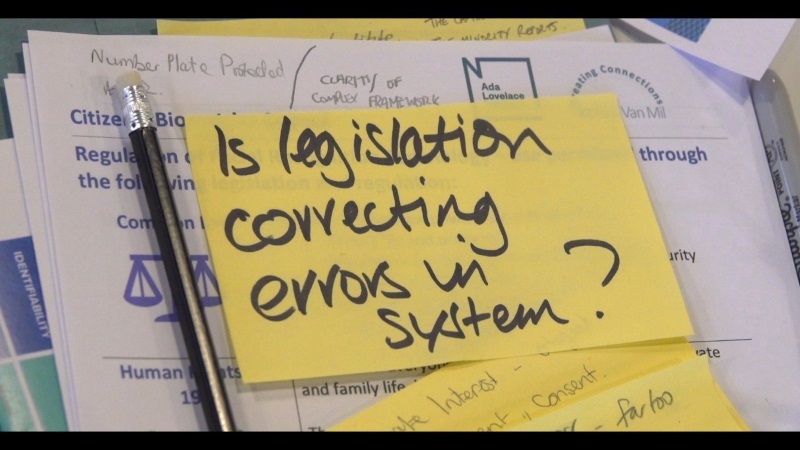

Ensuring our regulatory frameworks are fit for purpose

New and emerging concepts such as group and collective privacy (PDF) invite us to question whether additional regulatory frameworks are needed to complement existing laws – protecting privacy in a wider ecosystem where the privacy of groups, not just of individuals, is often compromised.

Other regulatory domains, such as competition law, are increasingly being shaped by the implications of our data-driven economy. The acquisitive practices of large technology companies are often criticised for being anti-competitive. However, existing competition law has struggled to articulate what it means for anti-competitive behaviour to exist in a data-enabled society (in which data-driven services rely on network effects, creating natural monopolies), where access to personal data, not price, is exchanged in return for access to platform services.

Particular types of data may also be under-protected by existing regulatory frameworks. The regulatory and policy framework for governing the use of biometric data, for example, has been outpaced by advances in the technologies, such as facial recognition, that enable such data to be used by private companies or public bodies. We need to grapple with how the potential of new technologies changes how certain data can be used, and to understand – through independent, impartial and evidence-led analysis – what the implications are for the regulatory landscape. On this issue the Ada Lovelace Institute has commissioned an independent review led by Matthew Ryder QC which will report in early 2021.

Much of the UK’s regulatory regime in the context of data has been led by European Union legislation, and the EU continues to be at the forefront of regulating to address harms in the digital economy. Beyond Brexit, we will need a new national vision for data regulation which articulates a positive vision for the protection and use of data for the public good while continuing to facilitate international data flows with the EU and beyond.

The next decade: challenges and opportunities

The next decade will be defined by how we respond to these three identified ruptures. If they are to have a positive long-term influence on the data governance landscape it will be because we will have heeded their lessons.

The Royal Society and British Academy’s 2017 report Data management and use: Governance in the 21st century called for a new interdisciplinary stewardship body that would be deeply connected to diverse communities, nationally focused and globally relevant. The Ada Lovelace Institute was created in response to this call. The evidence we are gathering for policy-making and regulatory reform includes scrutiny of social and technical infrastructures and systems, and – crucially – the perspectives of those who will be most impacted: the public. This scrutiny will be critical for public trust and confidence in, and democratic legitimacy for, more widespread use of data-driven decision-making and algorithmic systems in our society.

You can download the Royal Society’s new publication The UK data governance landscape: explainer (PDF).

For another perspective on the UK's data governance landscape and the Royal Society's Data Governance Explainer, please see Using data for the public good: the roles of clear governance, good data and trustworthy institutions.